The firmware will require some tweaking, it’s not quite able to do what you want. Right now you can use one axis with two encoders but you lose access to the other axis.

Okay, thank you. We’ll keep that in mind for our design choice.

I’m currently looking into tweaking the firmware as such. Though possible, it seems that the firmware is not build with the intention of supporting both an absolute and incremental encoder per axis. Do you think it will be desirable to others to split the encoder class in an absolute and incremental encoder and implement them as such?

The reason I’m asking this, is because for our project we will require the use of both. So we’ll either just tweak the firmware like you indicated or adjust the encoder class entirely and possibly contribute that to the ODrive project.

Adjusting the encoders entirely would be more beneficial to us in the long run anyway. Though it would take a lot more time and effort.

This is the next thing we’re doing.

Encoder will be given a base class, and separated further from Axis. Then we can make any number of encoders and use them in each axis. If you’d like to help contribute to that, @Samuel is the guy to talk to.

We’ll be discussing whether we intend to actively participate or not, on Monday.

We’ll also soon release a post about our project. Now that we’ve definitively chosen to use the ODrive.

Sorry for the delay.

We would like to contribute to the encoder refactor @Samuel. We, currently being myself and @XJey5. We would like to start on the refactor in January. We hope we can be of help

We’ve posted a general introduction about our project if you’re interested:

Hey! Sorry for the late reply.

Cool that you chose ODrive for your project and thanks for offering to help!

From my perspective the objectives of the refactoring are:

- Split the

class Encoderinto several smaller classes that each have a clear easy-to-understand purpose. - Decouple the hardware-specific encoder drivers (quadrature encoder, hall-effect encoder, various SPI encoders, …) from estimation algorithms (e.g. the PLL and interpolation algorithms).

so that for instance the SPI protocol code is disentangled from the SPI have a clearly defined purpose and to disentangle hardware-dependent code (like “get the quadrature encoder count”) - Decouple the encoders from the axes: Apart from the label on the PCB, the links “M0<=>ENC0” and “M1<=>ENC1” in the code are purely artificial.

- Have support for dynamically defining the number and types of encoders that the user wants to use. For instance there can be only two quadrature encoders (because they depend on MCU timers) but there can be many more SPI encoders (each with its own nCS line).

The last point is a bit tricky to get right so as an intermediate step I would propose to have a predefined collection of encoders (quad_enc0, quad_enc1, spi_enc0, spi_enc1, hall_enc0, hall_enc1) as toplevel objects in class ODrive. In addition there could be separate objects for the estimator components (the estimator stuff could be further split into separate components but that can happen at a later point).

Each of those toplevel objects can have an OutputPort<float> or OutputPort<uint32_t> or similar on which it exposes the raw unprocessed encoder value. In addition it might need a few flags that describe the encoder (e.g. does it maintain the absolute offset across reboots? is it a linear or circular value?). In the setup functions like start_closed_loop_control() these outputs can be connected to the inputs of the estimator components and the estimator components can be connected to the controllers and motors according to the user configuration.

To indicate which controller/motor uses which encoder, we can for the time being just assign an integer to each of the encoders.

Depending on your use case you might want to add an additional component that fuses the estimates from two encoders (we have interest in encoder fusion too, but that would be a separate discussion).

Let me know if you have further questions!

We’ve already made a head start.

Split the class Encoder into several smaller classes that each have a clear easy-to-understand purpose.

We had a slightly different way in mind. Namely, using a parent encoder class with additional inherited classes for specific encoder types. This allows an axis’ controller to interact with the encoder regardless of type and we won’t have to define similar variables like shadow_count across multiple encoder types.

Decouple the hardware-specific encoder drivers (quadrature encoder, hall-effect encoder, various SPI encoders, …) from estimation algorithms (e.g. the PLL and interpolation algorithms).

so that for instance the SPI protocol code is disentangled from the SPI have a clearly defined purpose and to disentangle hardware-dependent code (like “get the quadrature encoder count”)

As mentioned above, we intend to keep general encoder entities into the parent class while moving encoder type specifics to their specific inherited classes.

Though we currently have limited ourselves to splitting them into incremental and absolute encoders.

Decouple the encoders from the axes: Apart from the label on the PCB, the links “M0<=>ENC0” and “M1<=>ENC1” in the code are purely artificial.

Have support for dynamically defining the number and types of encoders that the user wants to use. For instance there can be only two quadrature encoders (because they depend on MCU timers) but there can be many more SPI encoders (each with its own nCS line).

We’re trying to add an encoder_manager class which has the purpose of properly regulating the switching between encoders for each axis. So that the axis requires no knowledge of how many or what type of encoders are present but can just select one of the encoders available. (The encoder_manager would handle all safety issues like checking when axis wants to use encoder5 if it exists and whether it is ready.)

The last point is a bit tricky to get right so as an intermediate step I would propose to have a predefined collection of encoders (quad_enc0, quad_enc1, spi_enc0, spi_enc1, hall_enc0, hall_enc1) as toplevel objects in class ODrive. In addition there could be separate objects for the estimator components (the estimator stuff could be further split into separate components but that can happen at a later point).

We had a similar idea but then divided in 2 incremental encoders and up to 4 absolute encoders.

As you might have noticed, our user case is oriented to using an incremental and absolute encoder together on a single axis. We’ve no particular interest in encoder fusion as we use the incremental encoder for velocity estimates of the motor and the absolute encoder for position estimates of the mechanical joint which the motor is a component of. With the exception of current control, we run our control separately on a SBC.

We had a slightly different way in mind. Namely, using a parent encoder class with additional inherited classes for specific encoder types.

This might already be an improvement over the current state but there are a couple of reasons why I would prefer a composition approach over an inheritance approach.

Consider this example:

raw count vel estimate

Incremental Encoder ============> PLL ==============> Velocity Controller

| |

vel estimate | torque setpoint |

v v

Hall Effect Sensors ==========> interpolate ========> Current Controller

state (0-5) phase

In this configuration the hall effect sensors don’t need to implement a PLL and the incremental encoder doesn’t need to implement interpolation. Implementing them would add complexity and CPU and memory usage.

Other examples are the sensorless encoder, which doesn’t need a PLL, and an ACIM setup where there needs to be an ACIM estimator between the encoder and the current controller to estimate the rotor flux angle.

If you’re interested, here’s a more general discussion on the software architectural implications of composition vs inheritance.

On a high level we’re trying to move towards a more “flow graph” style design where advanced users can customize the data paths in any way they like. We’re not gonna get there any time soon but use this goal to inform design decisions.

We’re trying to add an encoder_manager class which has the purpose of properly regulating the switching between encoders for each axis.

Just keep in mind that an axis consumes encoder data in up to three places: position controller (pos estimate), velocity controller (vel estimate) and current controller (phase estimate). Some applications require the phase estimate to come from a different encoder than the pos and vel estimates (currently this is implemented in a rather hacky way via the load_encoder config variable).

One of the main reasons for us to use inheritance at this point is the ability to implement the most basic functions in the parent encoder class, such as get_schadow_count and set_error, do_checks, check_pre_calibrated and a few more. Where needed these functions can be extended by the child classes even with the ability to use different compositions.

However (in case we would not use inheritance) when you need information from an encoder for the velocity encoder, you need to store multiple different type of encoders in your encoder class in order to get the correct information. While if the controller would just store a parent encoder type class the controller does not need to check every different encoder type, but in can just ask for the vel_estimate, regardless of the underlying logic.

This would still give you the opportunity to implement the PLL in a different way, since it would be a sub class of the incremental encoder.

And unfortunately do we not have enough time to also restructure the PLL and other parts of the code at this time.

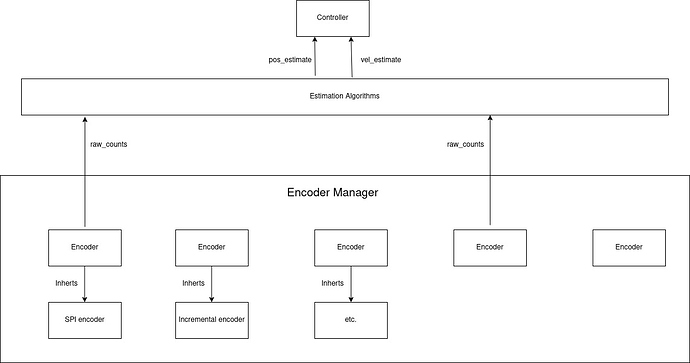

Our new proposition would be to use the following construction, in order to use the best of both worlds:

PS: Here you can find our progress so far. At the moment we are in the works of merging the first edits.

I’d like to further elaborate on our reasoning behind this proposed structure.

We don’t have much knowledge on encoders in general. So it will require quite a bit of effort for us to split up the estimation algorithms based on types. We therefore thought it’d be more useful and time efficient for us to focus on the modularity of switching encoders.

With the proposed structure we’d only use the encoder classes to sample their respective data and send that raw data, combined with an indicator of the encoder’s type, to the estimation algorithms. Selecting and checking encoders could still be handled by the encoder manager. For the estimation algorithms we would just implement a single class, which in the future, can always be further split up in a proper composition.

If anything is unclear or you disagree feel free to let us know

With the proposed structure we’d only use the encoder classes to sample their respective data and send that raw data, combined with an indicator of the encoder’s type, to the estimation algorithms.

Yeah this separation is the most important one I was trying to get at that should be orthogonal to the class hierarchy.

So I think the diagram that @XJey5 sent is a good intermediate structure to work towards. You might find that a few more connections next to raw_count are needed, specifically I’m thinking of a bool flag that says “this encoder maintains absolute offset between reboots”. This is so that the estimator algorithms can properly decide when an encoder can be used for phase estimation. This could also be a config flag on the “Estimator Algorithms” object. It’s possible that the Estimator Algorithms needs to support both integer and float inputs. This will become clearer as you progress with the implementation.

The Encoder Manager I would implement just as a single function get_encoder (in board.cpp or main.cpp) that returns an Encoder* from an int.

Additionally the sensorless and ACIM modes need to keep working, so they would need to inherit the Encoder interface too.

We are struggling a bit with the placement of the estimation algorithm class. In the diagram shown in a previous post, we put the class outside of the encoder parent class. However in order to maintain/implement as much composition as possible, we can also put the estimation algorithm class inside the encoder class, thereby still outputting the velocity estimate values via the encoder, but calculated by the estimation algorithm class inside the encoder.

This will result in less changes inside other classes “neighboring” the encoder class, like axis.

What do you think?

With the future direction of the firmware in mind I would say putting the estimator algorithm class outside of the encoder parent class is more future-friendly.

Hi there, here is both an update on our progress as well as a problem we’ve run into.

We have currently implemented the encoder parent class, the inherited incremental encoder class, an encoder processor class (features estimation algorithms, update functions etc.) and a encoder manager class which is basically the interface between these classes and for anything wanting to use encoder data. As we now have the general structure up and running for a single type of encoder we can now actually start testing whether everything functions like it should.

We have, however, run into the problem that adding a new case in the update function (for a undefined encoder state, which is necessary for the initial encoder parent class) breaks the communication between the ODrive and the odrivetool. Below I’ll add the code snippit.

bool EncoderProcessor::update(){

// update internal encoder state.

int32_t delta_enc = 0;

int32_t latched_count = raw_count.any().value(); //LATCH value to prevent override

switch (encoder->config_.mode) {

case Encoder::MODE_UNDEFINED:

{

} break;

case Encoder::MODE_INCREMENTAL: {

//TODO: use count_in_cpr_ instead as shadow_count_ can overflow

//or use 64 bit

int16_t delta_enc_16 = (int16_t)latched_count - (int16_t)shadow_count_;

delta_enc = (int32_t)delta_enc_16; //sign extend

} break;

If we comment the case Encoder::MODE_UNDEFINED block and flash the odrive with the firmware, no issues are present (besides the impact of that part of logic missing).

Do you have any clue why it might screw up the communication between the odrive and odrivetool?

So I’m not entirely sure what the problem was, however, it has been fixed by simply adding a return statement to the MODE_UNDEFINED case. This is allowed as the undefined encoder has nothing to update and it has the added benefit of skipping the unnecessary successive body of the update function.

We are currently running into the problem that the firmware crashes whenever we try to connect inputports to outputports during runtime. I assume this has to do with their member functions not being thread safe as documented. We’ve been trying to find a runtime friendly solution. We’ve tried implementing the problematic part of the code using a critical section to no avail. Similarly reading them directly using pointers has proven unsuccessful.

Do you guys have any suggestions?

Cheers

Hi fellas,

It’s been some time since our last update so here goes.

We’ve managed to successfully implement our intended structure with functioning incremental and absolute encoders. Which is great news.

We’ve run into new problems though, while trying to increase the amount of configurable encoders from 2 to 6. The problem seems to be in using the asynchronous transfer of the spi_arbiter by more than 2 absolute encoders.

Debugging is still rather difficult due to our feedback being limited to the odrivetool no longer reacting as a sign that the firmware got stuck in undefined behaviour or something like that.

As usual any suggestion on the spi difficulties or a way to maybe make debugging a bit easier are very welcome.

Cheers

You’re not using an STLinkV2 for debugging with GDB?

We have not used an STLink yet. Though I’m currently trying to get it up and running. I’m using vscode but when setting breakpoints, it indicates that no source file can be found with the name of in which the breakpoint is set.