Hi there,

I am building a drum-playing robot that will eventually incorporate 10 motors or so. I plan to use either a Teensy or a Raspberry Pi to decode MIDI signals and distribute instructions to the appropriate motor. I have been researching the implementation details, and I’m hoping to solicit your advice on a few issues. I also have a couple of specific drive questions.

-

Time Synchronization: There are a few issues here. First off, the time it takes for a drum stick to travel depends on the speed (loudness) of the hit. I plan to characterize these times using a piezo sensor on the drum head, and look up (or calculate) the appropriate delay time for each motion. But I don’t think that maintaining the timing on the MIDI controller and broadcasting move commands over USB will provide sufficient timing accuracy, especially as the number of motors increases. Humans can perceive timing differences in sounds separated by a few milliseconds. Is there any clock synchronization capability written into the existing firmware? Has anyone successfully implemented coordinated, multi-axis movement with more than one ODrive? Do you know what the existing latency is, and how much it fluctuates? Does UART present any advantages over USB for real-time control?
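To make the lookup idea concrete, here is a rough sketch of what I have in mind for the delay compensation. It is plain Python with no motor-controller calls. The calibration table, its numbers, and the function names `travel_time_ms` and `launch_time_ms` are all placeholders I made up for illustration; the real values would come from the piezo measurements.

```python
from bisect import bisect_left

# Hypothetical calibration table: (MIDI velocity, stick travel time in ms).
# These numbers are placeholders, not measurements.
CALIBRATION = [(1, 120.0), (32, 80.0), (64, 55.0), (96, 40.0), (127, 30.0)]


def travel_time_ms(midi_velocity, table=CALIBRATION):
    """Linearly interpolate the stick travel time for a MIDI velocity."""
    velocities = [v for v, _ in table]
    # Clamp to the calibrated range so we never extrapolate.
    v = min(max(midi_velocity, velocities[0]), velocities[-1])
    i = bisect_left(velocities, v)
    if velocities[i] == v:
        return table[i][1]
    (v0, t0), (v1, t1) = table[i - 1], table[i]
    return t0 + (t1 - t0) * (v - v0) / (v1 - v0)


def launch_time_ms(hit_time_ms, midi_velocity):
    """Time at which the move must start so the stick lands at hit_time_ms."""
    return hit_time_ms - travel_time_ms(midi_velocity)
```

With this placeholder table, a note due at 1000 ms with MIDI velocity 64 would have to be launched at 945 ms. The catch is that every note has to be known at least one travel time in advance, so the MIDI stream would need to be buffered by the longest travel time in the table.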

-

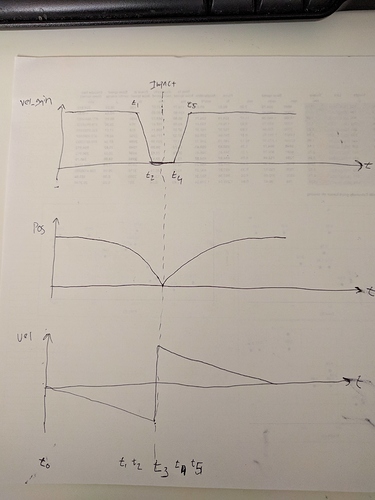

I haven’t found much documentation on the “feed-forward” arguments in the Python library, but I suspect they may be appropriate for the motion control I’m looking for. In particular, I want to drive the drum stick towards the drum head at a specific speed (velocity control), send the motor into free rotation just before it hits the drum head to allow for a natural bounce (torque goes to zero at a specific position), and then retract the stick to the starting position (position control). The documentation encourages choosing one control mode (Position/Velocity/Torque). Am I correct in understanding that position mode offers control of all three quantities, with the feed-forward values setting limits on torque and velocity when moving to a specific position? If a feed-forward value of 0 means “no limit”, how can I set the motor to free-wheel (0 torque)? Can you change control modes quickly between moves?
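For reference, this is roughly the strike sequence I’m describing, written as a small state machine. It is only a sketch and deliberately makes no ODrive calls, since how the phases map onto the real control modes is exactly what I’m asking about. The names `Phase`, `next_phase`, and `command_for`, the sign convention (position increases towards the drum head), and the tolerance value are all my own assumptions.

```python
from enum import Enum, auto


class Phase(Enum):
    APPROACH = auto()  # velocity control towards the drum head
    COAST = auto()     # zero torque so the stick can bounce freely
    RETRACT = auto()   # position control back to the rest position
    IDLE = auto()      # holding at rest, waiting for the next note


def next_phase(phase, position, velocity, release_pos, rest_pos, tolerance=0.01):
    """Advance the strike sequence based on the measured position and velocity."""
    if phase is Phase.APPROACH and position >= release_pos:
        return Phase.COAST  # let go just before the head
    if phase is Phase.COAST and velocity < 0:
        return Phase.RETRACT  # stick has rebounded and is moving away
    if phase is Phase.RETRACT and abs(position - rest_pos) <= tolerance:
        return Phase.IDLE
    return phase


def command_for(phase, strike_speed, rest_pos):
    """Return a (mode, setpoint) pair for the current phase."""
    if phase is Phase.APPROACH:
        return ("velocity", strike_speed)
    if phase is Phase.COAST:
        return ("torque", 0.0)
    # RETRACT and IDLE both hold the rest position.
    return ("position", rest_pos)
```

Each pass through the control loop would call `next_phase` with the latest encoder reading and then send whatever `command_for` returns. Whether that loop can run fast enough on the host side, or has to live in the firmware, depends on how quickly the control mode can be switched.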

I’m a few years into what I’m calling “my 10-year project,” so I know I’ve got a lot of work ahead of me. I was well on my way to writing my own Teensy-based motor controller for a brushed-DC implementation when I discovered ODrive, and couldn’t pass up the opportunity to use high-torque, quiet, brushless motors. As for my background: I’ve got some embedded programming experience, but I’m not an expert. I have the summer off (my day job is teaching physics), so I’m hoping to make good progress on this robotics project over the next few months. My ODrive just arrived today, so my gears are turning and I’m excited to get to work!